PoplarML - Deploy Models to Production



- Introduction: Effortlessly deploy ML models with PoplarML, which is compatible with well-known frameworks and enables real-time inference.
- Category: Other
- Added on: Mar 07 2023
- Monthly Visitors: 0.0


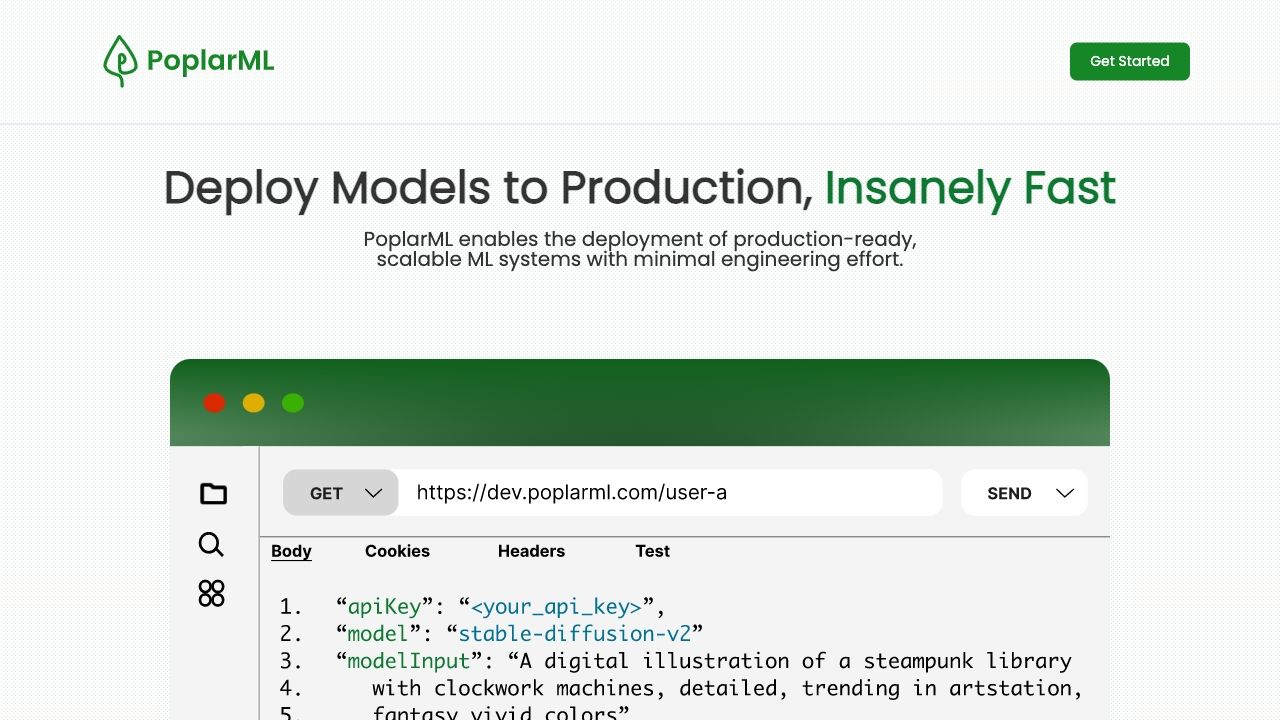

PoplarML - Deploy Models to Production: An Overview

PoplarML is a platform for deploying production-ready, scalable machine learning (ML) systems with minimal engineering effort. It lets users deploy their ML models to a fleet of GPUs and supports the leading machine learning frameworks, including TensorFlow, PyTorch, and JAX. Deployed models are exposed through a REST API for real-time inference.

PoplarML - Deploy Models to Production: Main Features

- Deployment of ML models to a fleet of GPUs using a command-line interface (CLI) tool.

- Real-time inference through a REST API endpoint.

- Framework-agnostic: supports TensorFlow, PyTorch, and JAX models.
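To make "framework-agnostic" concrete, here is a minimal sketch of a pattern serving platforms commonly use: each model, whatever framework produced it, is wrapped in an adapter that exposes the same `predict` method, so the serving layer never needs to know the framework. The `ModelAdapter` protocol, `CallableAdapter`, `register`, and `serve` names are hypothetical and are not PoplarML's actual internals. To keep the sketch runnable without installing TensorFlow, PyTorch, or JAX, the "models" are plain Python functions standing in for real ones.

```python
from typing import Callable, Dict, List, Protocol


class ModelAdapter(Protocol):
    """Hypothetical common interface that every framework adapter implements."""

    def predict(self, inputs: List[float]) -> List[float]: ...


class CallableAdapter:
    """Wraps any callable model (e.g. a PyTorch module or a jitted JAX function)
    behind the shared predict() interface. A real adapter would also convert
    inputs to framework tensors/arrays and outputs back to Python lists."""

    def __init__(self, framework: str, fn: Callable[[List[float]], List[float]]):
        self.framework = framework
        self._fn = fn

    def predict(self, inputs: List[float]) -> List[float]:
        return list(self._fn(inputs))


# Hypothetical registry the serving layer looks models up in, keyed by name.
REGISTRY: Dict[str, ModelAdapter] = {}


def register(name: str, adapter: ModelAdapter) -> None:
    REGISTRY[name] = adapter


def serve(name: str, inputs: List[float]) -> List[float]:
    # The serving code is identical no matter which framework built the model.
    return REGISTRY[name].predict(inputs)


# Plain-Python stand-ins for models exported from different frameworks.
register("doubler", CallableAdapter("pytorch", lambda xs: [2 * x for x in xs]))
register("shifter", CallableAdapter("jax", lambda xs: [x + 1 for x in xs]))

print(serve("doubler", [1.0, 2.0]))  # [2.0, 4.0]
print(serve("shifter", [1.0, 2.0]))  # [2.0, 3.0]
```

The design choice is that framework-specific code lives only inside adapters; adding support for another framework means writing one more adapter, not touching the serving path.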

PoplarML - Deploy Models to Production: User Guide

- Get Started: Visit the PoplarML website and create an account.

- Deploy Models to Production: Use the CLI tool to deploy your ML models to a fleet of GPUs; PoplarML then manages scaling.

- Real-time Inference: Use the REST API endpoint to invoke your deployed model and receive real-time predictions.

- Framework Compatibility: Bring your models built in TensorFlow, PyTorch, or JAX, and let PoplarML handle the deployment process effortlessly.
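The real-time inference step boils down to an HTTP POST against the deployed model's endpoint. The sketch below uses only the Python standard library. The endpoint URL, the `Authorization: Bearer` header, and the `{"inputs": ...}` JSON payload are all assumptions for illustration, not documented PoplarML API details; substitute whatever the PoplarML dashboard or documentation specifies for your deployment.

```python
import json
import urllib.request
from typing import Any, Dict, List

# Hypothetical placeholders: replace with the endpoint URL and API key
# issued for your deployed model. The header name and payload shape used
# below are assumptions, not PoplarML's documented API.
ENDPOINT = "https://example.com/v1/models/my-model/predict"
API_KEY = "YOUR_API_KEY"


def build_predict_request(endpoint: str, api_key: str, inputs: List[Any]) -> urllib.request.Request:
    """Build a POST request that carries the model inputs as a JSON body."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def parse_predict_response(raw: bytes) -> Dict[str, Any]:
    """Decode the JSON body returned by the inference endpoint."""
    return json.loads(raw.decode("utf-8"))


def predict(endpoint: str, api_key: str, inputs: List[Any], timeout: float = 10.0) -> Dict[str, Any]:
    """Send one real-time inference request and return the parsed JSON response."""
    request = build_predict_request(endpoint, api_key, inputs)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_predict_response(response.read())
```

Calling `predict(ENDPOINT, API_KEY, [[0.1, 0.2, 0.3]])` would send a single request and return whatever JSON the deployment responds with. Keeping request construction separate from sending makes the request easy to inspect or test without network access.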

PoplarML - Deploy Models to Production: User Reviews

- "PoplarML made it incredibly simple to deploy my models. The CLI tool is intuitive, and I was able to get everything up and running in no time!" - Alex D.

- "The real-time inference feature is a game-changer for my applications. I love how quickly I can get predictions." - Maria S.

- "As someone who works with multiple frameworks, I appreciate PoplarML's framework-agnostic approach. It saves me so much hassle!" - Jason T.

FAQ from PoplarML - Deploy Models to Production

What is the purpose of PoplarML?

PoplarML is a platform for deploying scalable, production-ready machine learning systems with minimal engineering effort.

How can I get started with PoplarML?

To get started, create an account on the PoplarML website, then use the command-line interface (CLI) tool to deploy your machine learning models to a fleet of GPUs. Each deployed model is then accessible through a REST API for real-time inference.

What key functionalities does PoplarML offer?

Its key features are model deployment to GPUs through a CLI, real-time inference through a REST API, and compatibility with leading machine learning frameworks, including TensorFlow, PyTorch, and JAX.

In what scenarios is PoplarML a good fit?

PoplarML suits teams that need to deploy machine learning models to production, scale ML systems without a large engineering investment, serve real-time predictions from deployed models, or work across several machine learning frameworks.
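Because scaling is handled by the platform, it can help to see the kind of rule a managed service might apply when deciding how many GPU replicas to run. This overview does not describe PoplarML's actual scaling policy, so the following is an assumption: a minimal sketch of the target-tracking formula documented for the Kubernetes Horizontal Pod Autoscaler, `ceil(current_replicas * current_load / target_load)`, clamped to hypothetical minimum and maximum replica counts. "Load" here is any per-replica metric, such as requests per second or GPU utilization.

```python
import math


def desired_replicas(current_replicas: int, current_load: float, target_load: float,
                     min_replicas: int = 1, max_replicas: int = 8) -> int:
    """Target-tracking rule (assumed, HPA-style): scale the replica count in
    proportion to how far the observed per-replica load is from the target,
    then clamp the result to [min_replicas, max_replicas]."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    raw = math.ceil(current_replicas * current_load / target_load)
    return max(min_replicas, min(max_replicas, raw))


# 2 replicas each at 90 req/s against a target of 50 req/s: ceil(3.6) = 4.
print(desired_replicas(2, 90.0, 50.0))   # 4
# Light traffic shrinks the fleet, but never below min_replicas.
print(desired_replicas(4, 10.0, 50.0))   # 1
# A traffic spike is capped at max_replicas.
print(desired_replicas(4, 200.0, 50.0))  # 8
```

Clamping keeps a sudden spike from provisioning unbounded GPUs and keeps at least one replica warm so real-time requests are not delayed by a cold start.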


Latest Posts

More

-

Discover 10 Groundbreaking AI Image Generators Transforming ArtistryThe integration of artificial intelligence (AI) into various technological domains has fundamentally shifted how we approach content creation. One of the most exciting applications of AI today is in image generation. These AI tools can create highly detailed and realistic images, offering countless possibilities for digital artists, marketers, and developers. Below is an extensive exploration of 10 innovative AI image generators that you need to try, complete with the latest data and user feedback.

Discover 10 Groundbreaking AI Image Generators Transforming ArtistryThe integration of artificial intelligence (AI) into various technological domains has fundamentally shifted how we approach content creation. One of the most exciting applications of AI today is in image generation. These AI tools can create highly detailed and realistic images, offering countless possibilities for digital artists, marketers, and developers. Below is an extensive exploration of 10 innovative AI image generators that you need to try, complete with the latest data and user feedback. -

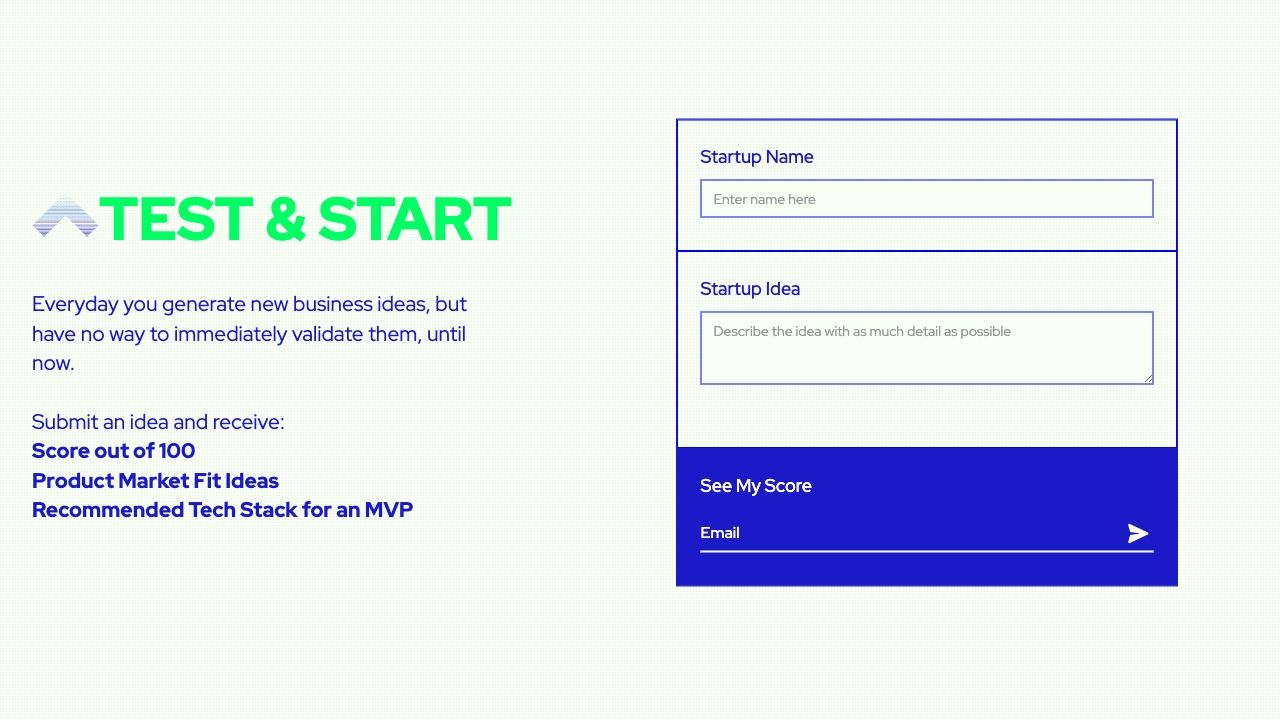

7 Game-Changing AI Tools to Transform Your Business Operations in 2024In the fast-paced world of business, staying ahead of the competition demands cutting-edge technology and innovative solutions. As we approach 2024, the integration of Artificial Intelligence (AI) tools has become an indispensable strategy for enhancing efficiency, increasing profitability, and streamlining operations. This article will introduce seven top AI business tools that can significantly boost your business operations in the upcoming year.

7 Game-Changing AI Tools to Transform Your Business Operations in 2024In the fast-paced world of business, staying ahead of the competition demands cutting-edge technology and innovative solutions. As we approach 2024, the integration of Artificial Intelligence (AI) tools has become an indispensable strategy for enhancing efficiency, increasing profitability, and streamlining operations. This article will introduce seven top AI business tools that can significantly boost your business operations in the upcoming year. -

Discover the Top AI Image Generators of 2024Artificial Intelligence (AI) continues to revolutionize various industries, including digital art and design. The advent of AI-powered image generators has opened up a world of possibilities for artists, designers, and content creators. These tools are not just for professionals; even hobbyists can now create stunning visuals with minimal effort. As we move into 2024, several AI image generators stand out with their advanced features, user-friendly interfaces, and impressive outputs. Here are our top picks for the best AI image generators of 2024, enriched with the latest data, expert insights, and real user reviews.

Discover the Top AI Image Generators of 2024Artificial Intelligence (AI) continues to revolutionize various industries, including digital art and design. The advent of AI-powered image generators has opened up a world of possibilities for artists, designers, and content creators. These tools are not just for professionals; even hobbyists can now create stunning visuals with minimal effort. As we move into 2024, several AI image generators stand out with their advanced features, user-friendly interfaces, and impressive outputs. Here are our top picks for the best AI image generators of 2024, enriched with the latest data, expert insights, and real user reviews. -

Top 8 AI Tools for Mastering Learning and EditingIn the fast-paced, digital-first world we live in, leveraging Artificial Intelligence (AI) tools has become crucial for enhancing learning and productivity. Whether you are a student trying to grasp complex concepts or a professional aiming to optimize your workflow, AI tools offer a myriad of features to help achieve your goals efficiently. Here, we present the best eight AI learning and editing tools for students and professionals, highlighting their unique features, user feedback, and practical applications.

Top 8 AI Tools for Mastering Learning and EditingIn the fast-paced, digital-first world we live in, leveraging Artificial Intelligence (AI) tools has become crucial for enhancing learning and productivity. Whether you are a student trying to grasp complex concepts or a professional aiming to optimize your workflow, AI tools offer a myriad of features to help achieve your goals efficiently. Here, we present the best eight AI learning and editing tools for students and professionals, highlighting their unique features, user feedback, and practical applications. -

Best 6 AI Marketing Tools to Skyrocket Your CampaignsIn the modern digital landscape, businesses continuously seek innovative methods to enhance their marketing campaigns and achieve substantial growth. The integration of artificial intelligence (AI) in marketing has revolutionized the way companies analyze data, understand their audience, and execute their strategies. Here, we explore the six best AI marketing tools that can dramatically elevate your marketing campaigns.

Best 6 AI Marketing Tools to Skyrocket Your CampaignsIn the modern digital landscape, businesses continuously seek innovative methods to enhance their marketing campaigns and achieve substantial growth. The integration of artificial intelligence (AI) in marketing has revolutionized the way companies analyze data, understand their audience, and execute their strategies. Here, we explore the six best AI marketing tools that can dramatically elevate your marketing campaigns. -

Top Speech-to-Text Apps for 2024As artificial intelligence (AI) continues to evolve, speech-to-text (STT) technology has seen significant advancements, streamlining various facets of both personal and professional communication. STT applications transform spoken language into written text, benefiting a wide range of users including journalists, business professionals, students, and individuals with disabilities. In this article, we will explore the top speech-to-text apps available in 2024, leveraging the latest data, features, and customer reviews to provide a comprehensive overview.

Top Speech-to-Text Apps for 2024As artificial intelligence (AI) continues to evolve, speech-to-text (STT) technology has seen significant advancements, streamlining various facets of both personal and professional communication. STT applications transform spoken language into written text, benefiting a wide range of users including journalists, business professionals, students, and individuals with disabilities. In this article, we will explore the top speech-to-text apps available in 2024, leveraging the latest data, features, and customer reviews to provide a comprehensive overview.